Data Engineer - MRF05447

Hunter's Hub Inc.

Job Description

- Implement requests for the ingestion, creation, and preparation of data sources

- Develop and execute jobs to import data periodically or in (near) real time from an external source

- Set up a streaming data source to ingest data into the platform

- Deliver the data sourcing approach and data sets for analysis, with activities including data staging, ETL, data quality, and archiving

- Design solution architectures for both on-premises and cloud platforms that meet business, technical, and user requirements

- Profile source data and validate that it is fit for purpose

- Work with the Delivery Lead and Solution Architect to agree on pragmatic means of data provision to support use cases

- Understand and document end-user usage models and requirements

- Bachelor’s degree in maths, statistics, computer science, information management, finance, or economics

- At least 3 years’ experience integrating data into analytical platforms using patterns such as APIs, files, XML, JSON, flat files, Hadoop file formats, and cloud file formats

- Experience in ingestion technologies (e.g. Sqoop, NiFi, Flume), processing technologies (Spark/Scala), and storage (e.g. HDFS, HBase, Hive) is essential

- Experience in designing and building data pipelines using cloud platform solutions and native tools

- Experience in Python and JVM-compatible languages, and in the use of CI/CD tools such as Jenkins, Bitbucket, Nexus, and SonarQube

- Experience in data profiling, source-to-target mappings, ETL development, SQL optimisation, testing, and implementation

- Expertise in streaming frameworks (Kafka/Spark Streaming/Storm) is essential

- Experience managing structured and unstructured data types

- Experience in requirements engineering, solution architecture, design, and development / deployment

- Experience in creating big data or analytics IT solutions

- Track record of implementing databases, data access middleware, and high-volume batch and (near) real-time processing

Salvaloza Kenneth

HR Officer, Hunter's Hub Inc.

活躍於三天內

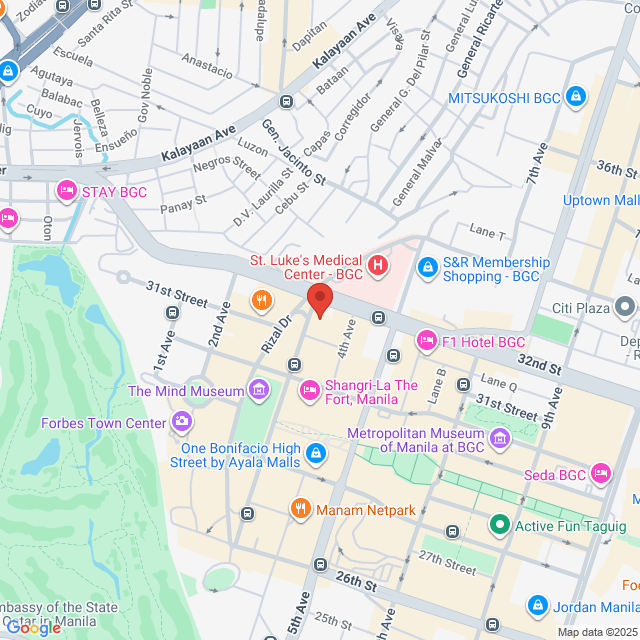

Work Address

7th Flr, The Curve, 1630 3rd Ave, Taguig, Metro Manila, Philippines

Posted on 19 March 2025
